diane.johnson | March 25th, 2021

Joni Mitchell introduced her song, “Clouds” or “Both Sides Now,” by saying:

This is a song that talks about sides to things. In most cases there are both sides to things and in a lot of cases there are more than just both…

But in this song there are only two sides to things…

What we’re taught to believe things are and what they really are. (reference)

Looking at cloud threats from both the attacker’s side and the user’s side can be helpful in today’s development lifecycles. Adding the attacker’s twisted perspective enhances development practices.

Both Sides of our Users’ Stories

- “What we are taught to believe things are” is our User Story or expected experience. These nuggets are the existing storyboard today; they are part of your software development lifecycle.

- “What they really are” is our Abuse Story or unexpected experience. These nuggets come from security incidents and attack analysis trenches.

Establishing Acceptance Criteria for Both Sides

Development practices include acceptance or success criteria that help define the outcome for both the Abuser and the User. Example acceptance criteria for an Abuse Story might include:

- I know I am done when the new preventive hooks (code, configuration, or events) are triggered for the specific attack pattern.

- I know I am done when a triggered detection completes the end-to-end workflow, including tickets created, notifications sent, and response actions completed.

- Keep using the typical technical and business acceptance criteria normally in play.
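One way to make criteria like these testable is to record what the workflow actually produced and list anything still missing. The sketch below is only an illustration: `WorkflowRecord` and `unmet_criteria` are hypothetical names, not part of any particular tool.

```python
from dataclasses import dataclass, field


@dataclass
class WorkflowRecord:
    """Hypothetical record of what a detection workflow produced for one attack pattern."""
    attack_pattern: str
    tickets: list[str] = field(default_factory=list)
    notifications: list[str] = field(default_factory=list)
    response_actions: list[str] = field(default_factory=list)


def unmet_criteria(record: WorkflowRecord) -> list[str]:
    """Return the acceptance criteria that are not yet satisfied."""
    missing = []
    if not record.tickets:
        missing.append("ticket created")
    if not record.notifications:
        missing.append("notification sent")
    if not record.response_actions:
        missing.append("response action completed")
    return missing


# "I know I am done" when nothing is missing.
record = WorkflowRecord(
    attack_pattern="phished credential confirmed from a new location",
    tickets=["TICKET-1"],
    notifications=["SOC"],
    response_actions=["session revoked"],
)
done = not unmet_criteria(record)
```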

Sounds easy, right? Here is how to break it down:

- Begin by leveraging good solid frameworks used by Security Professionals.

- Build out a backlog, resource it, prioritize it, and adjust every sprint.

Whether that backlog covers what an Individual Contributor is accountable for, a team’s work, or a Portfolio of Threat Landscape Epics, the concept is the same: dynamic solutions and enough agility to keep pace with the threat actors.

Frameworks for this approach include:

- The MITRE ATT&CK Framework is instrumental in structuring attack patterns into a knowable, navigable taxonomy. Use tactics as “Features.” Most security tools are adapting to this framework for detection and response (D&R) activity, and correlating development efforts with the coverage already in place keeps an eye on what is still needed.

- Leffingwell’s Scaled Agile is enhanced by adding a Threat Landscape solution train to the portfolio. There are options here; the goal is to get the threat dev cycles into the normal dev work and prioritized alongside it. The focus of the Threat Landscape solution train is continuous evolution of prevention and detection mechanisms across development platforms and the software codebase.

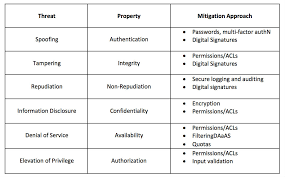

- Threat Risk Modeling methods can help the development conversations with the wider audience of dev teams. By adapting the STRIDE categories (Spoofing, Tampering, Repudiation, Information Disclosure, Denial of Service, and Elevation of Privilege), or those of another risk methodology, one is able to engage in consistent and repeatable discussions about attack patterns and the specific threats for which we want to build better defenses. A cheat sheet of terms helps everyone out here.

- Workflow Orchestration is foundational to accomplishing threat triage at scale. The ability to turn a brainstormed playbook into a visual flow of steps taken by automation is a powerful capability. Enter your Security Orchestration, Automation, and Response (SOAR) platform - not quite a framework but rather the glue that holds the frames together.
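To make the idea of a playbook as a flow of automated steps concrete, here is a minimal sketch that does not depend on any specific SOAR product. The step names, the context keys (`account`, `source_ip`), and the placeholder reputation value are all assumptions made for illustration; a real platform would supply its own integrations.

```python
from typing import Callable

Context = dict[str, object]
Step = Callable[[Context], Context]


def enrich_ip_reputation(ctx: Context) -> Context:
    # Placeholder: a real step would query a reputation service here.
    return {**ctx, "ip_reputation": "unknown"}


def open_ticket(ctx: Context) -> Context:
    summary = f"Suspicious login for {ctx['account']} from {ctx['source_ip']}"
    return {**ctx, "ticket": summary}


def notify_soc(ctx: Context) -> Context:
    return {**ctx, "notified": ["SOC"]}


# The brainstormed playbook, written down as an ordered list of steps.
PLAYBOOK: list[Step] = [enrich_ip_reputation, open_ticket, notify_soc]


def run_playbook(steps: list[Step], ctx: Context) -> Context:
    """Run each step in order, passing the enriched context along."""
    for step in steps:
        ctx = step(ctx)
    return ctx


result = run_playbook(PLAYBOOK, {"account": "alice", "source_ip": "203.0.113.7"})
```

Keeping each step a small function that takes and returns the same context makes it easy to reorder, add, or remove steps as the playbook evolves from sprint to sprint.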

An Example

Let’s examine both sides of cloud services for a single feature development cycle. Since much is published about how the Agile processes work, the focus in the example below will be on options one can use to layer in threat analysis.

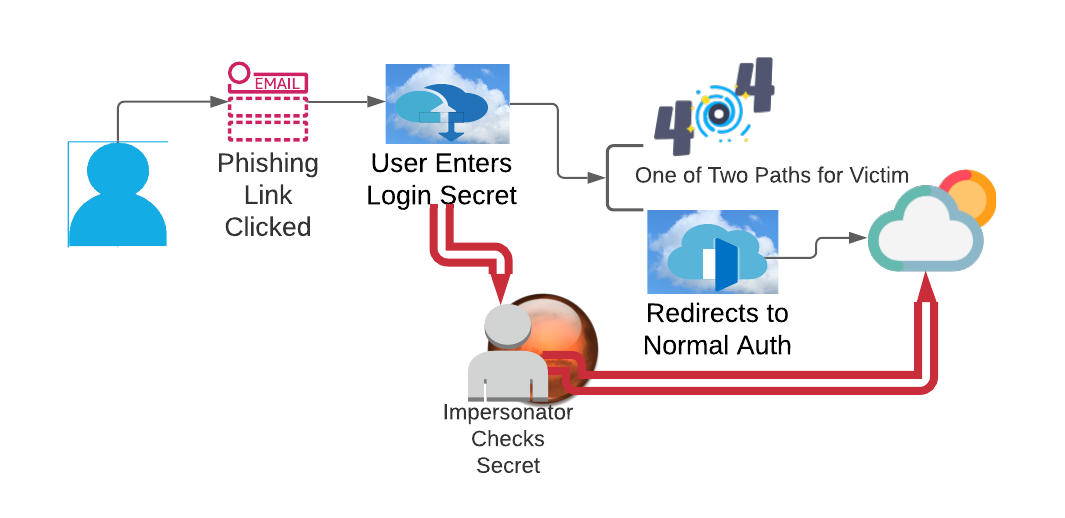

One common cloud threat is access to an account after its owner has been phished. There are lots of root causes here, and solutions will differ based on your scope of accountability. When investigating a potentially phished end user, one will often see a connection from some faraway location right after a successful phish. For some phishing campaigns the technique of confirming credentials is like clockwork. This is one of the threat characteristics we want to detect so that we can prevent further misuse by threat actors.

Picture This

An end user opens an email message, clicks the link, enters their login information, and it doesn’t work - the page is either 404 not found or the normal login page displays. So the end user logs in the normal way successfully from their normal location.

What was wrong with the picture? The attacker used the valid account, confirmed access, and no one is the wiser. The impersonator captured the login information and successfully used it from Mars. What else? Travel time between Mars and Earth is a little more than a few seconds. What else? Did the user see MFA or a note about logging in from Mars?

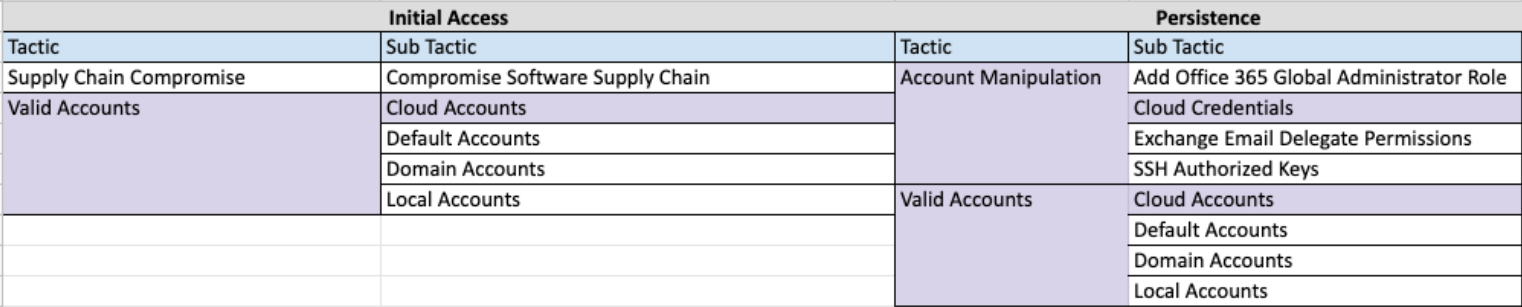

In this example, MITRE ATT&CK lists the Valid Accounts technique and its Cloud Accounts sub-technique as used by threat actors under both the Initial Access and Persistence tactics. Arranging features to align to this structure gives consistency to the process.

The Epic for our example is “Infrastructure Abuse of Valid Accounts”:

- Feature 1: Account Recon Detection & Response (D&R)

- Feature 2: Account suspicious indicator (location, device, time) Detection & Response (D&R)
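The Epic and its Features can be kept as structured data so that backlog items carry their ATT&CK reference with them. The sketch below is one possible layout, not a prescribed schema: the class and function names are hypothetical, and T1078.004 is the ATT&CK ID for the Cloud Accounts sub-technique of Valid Accounts.

```python
from dataclasses import dataclass, field


@dataclass
class Feature:
    name: str
    stories: list[str] = field(default_factory=list)


@dataclass
class Epic:
    name: str
    technique_id: str   # ATT&CK technique or sub-technique ID
    tactics: list[str]  # ATT&CK tactics the technique appears under
    features: list[Feature] = field(default_factory=list)


epic = Epic(
    name="Infrastructure Abuse of Valid Accounts",
    technique_id="T1078.004",  # Valid Accounts: Cloud Accounts
    tactics=["Initial Access", "Persistence"],
    features=[
        Feature("Account Recon Detection & Response (D&R)"),
        Feature(
            "Account suspicious indicator (location, device, time) "
            "Detection & Response (D&R)"
        ),
    ],
)


def backlog_items(e: Epic) -> list[str]:
    """Flatten an Epic into one backlog line per Feature."""
    return [f"{e.technique_id} | {e.name} | {f.name}" for f in e.features]


for item in backlog_items(epic):
    print(item)
```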

Framing the Features from Both Sides

User Story - What we are taught to believe things are:

As an end user, I want secure authentication to my cloud resources.

User Story Twist: I want to avoid exposing my own secret credentials; however, when things go wrong, I want to know when a threat actor has socially engineered me and used my credentials so that I can take appropriate response actions.

Abuse Story - What they really are:

As an attacker, I want to obtain credentials and confirm that they are owned.

As a defender, I want to detect, deter, and notify when authentication anomalies are within scope and triage those suspicious events for known and knowable threat tactics, techniques, and indications of compromise.

Acceptance criteria for addressing the stories are determined through discussions and then used to build out your test cases. Ideas for this attack pattern might include:

- Operations - I know I am done when automation steps are executed with expected outcomes for each input. (aka the normal stuff you are building for and testing to affirm)

- Prevention - I know I am done when the login is not allowed because the end user’s communications are outside of normal parameters. Risk score conditions may include impossible travel events, an unenrolled device, or multiple attempts within x time. The next step in the login flow, such as an extra MFA request or additional triage of IP reputation, is honored and executed.

- Prevention - I know I am done when the Threat Actor is not presented with useful information about either the individual account or the infrastructure when attempting to sign in with the newly phished credential. In the old days this simply meant not returning error codes that told the bad guy about success or failure, which led to mapping the directory of the service. Now there may be more to keep from the bad guys’ eyes, like what controls are in use.

- Detection - I know I am done when an alert notification is generated and completes the appropriate pipeline to delivery. The end point of the notification and the playbook of actions will vary; typical alerts might be sent to a Security Operations Center, a Customer Administrator, or even the end users themselves with guidance for self-remediation.

- Operations - I know I am done when tickets are created by the pipeline with sufficient detail for Analysts to investigate and act on. This will make use of SOAR to perform repeatable steps and gather enriched facts about the situation that are added to the tickets.
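The Prevention criterion about impossible travel lends itself to a small worked example. The following is a minimal sketch, assuming login events already carry a timestamp and an approximate latitude and longitude (for instance from IP geolocation). The thresholds, the `Login` type, and the decision labels are illustrative assumptions rather than values from any product.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_KMH = 900.0  # assumption: roughly airliner cruising speed
MIN_DISTANCE_KM = 50.0     # assumption: ignore small geolocation jitter


@dataclass
class Login:
    epoch_seconds: float
    lat: float
    lon: float
    device_enrolled: bool


def haversine_km(a: Login, b: Login) -> float:
    """Great-circle distance between two login locations."""
    lat1, lon1, lat2, lon2 = map(math.radians, (a.lat, a.lon, b.lat, b.lon))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def is_impossible_travel(prev: Login, cur: Login) -> bool:
    """True if covering the distance in the elapsed time is implausible."""
    distance = haversine_km(prev, cur)
    if distance < MIN_DISTANCE_KM:
        return False
    hours = abs(cur.epoch_seconds - prev.epoch_seconds) / 3600.0
    if hours == 0:
        return True
    return distance / hours > MAX_PLAUSIBLE_KMH


def next_login_step(prev: Login, cur: Login,
                    recent_failures: int, max_failures: int = 5) -> str:
    """Pick the next step in the login flow from simple risk conditions."""
    if is_impossible_travel(prev, cur):
        return "deny"
    if not cur.device_enrolled or recent_failures >= max_failures:
        return "step_up_mfa"
    return "allow"


# A login from New York followed 30 seconds later by one from Moscow.
new_york = Login(0, 40.71, -74.01, True)
moscow = Login(30, 55.76, 37.62, True)
decision = next_login_step(new_york, moscow, recent_failures=0)
```

In practice these conditions would feed a risk score rather than a hard-coded decision, and the same result would also drive the Detection and Operations criteria by raising an alert and opening a ticket.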

During the development discussions, the threat is dissected for understanding of expected patterns and what an attacker can really do. Threat Operation professionals go into their conversations with knowledge of the threats and details around execution of attacks and they are held accountable for gaining this insight for the greater team. Technical dependencies are identified and the fine details are filled in.

Using a risk modeling methodology like STRIDE, the solution patterns and approaches pop out; the team determines the monitoring, logging, and alerting needs for each threat and devises defenses and mitigation strategies. This example identifies a spoofing threat, so an authentication mitigation such as MFA or digital signatures would be the starting point for development initiatives. Post 2020 attacks, there are more approaches to include.
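A cheat sheet for these discussions can be as simple as a lookup table. The sketch below pairs each STRIDE category with the security property it violates, which is the conventional mapping, and records the spoofing starting points named above. The empty entries are deliberate: the team fills them in during its own modeling sessions. The function name is hypothetical.

```python
# STRIDE category -> security property the threat violates
STRIDE_PROPERTY = {
    "Spoofing": "Authentication",
    "Tampering": "Integrity",
    "Repudiation": "Non-repudiation",
    "Information Disclosure": "Confidentiality",
    "Denial of Service": "Availability",
    "Elevation of Privilege": "Authorization",
}

# Starting-point mitigations; only the spoofing entry comes from this example.
STARTING_MITIGATIONS = {
    "Spoofing": ["MFA", "digital signatures"],
}


def starting_points(category: str) -> tuple[str, list[str]]:
    """Return the violated property and any recorded starting mitigations."""
    prop = STRIDE_PROPERTY[category]  # raises KeyError for unknown categories
    return prop, STARTING_MITIGATIONS.get(category, [])


prop, mitigations = starting_points("Spoofing")
print(f"Spoofing threatens {prop}; start with: {', '.join(mitigations)}")
```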

The team takes the stories and success criteria and schedules the work within the backlog. Prioritization is influenced by relevant facts about current threat landscape trends and by measurements of what is encountered in incidents. The beauty of basing your portfolio design on MITRE ATT&CK tactics and techniques is that the detection and alerting security tooling is also leveraging this framework, so all things simply tie together.

That’s how one can look at cloud threats from both sides.